by Anneliese Poetz, KT Manager, NeuroDevNet

The KT Core provides evaluation services, mainly for KT events and KT products. Did you know that REDCap can be used to collect evaluation and survey data? As a NeuroDevNet researcher or trainee, you have free access to REDCap with your login and password. You can use REDCap to inform your research, for KT evaluation, or both.

What kind of surveys would you want to use REDCap for?

- Data collection for research

- Evaluation of your KT event (either in-person or webinar)

- Evaluation of your KT product (summary, infographic, video, etc.)

- Needs assessment for informing capacity building, research grant applications, etc.

- Any survey you would normally create with SurveyMonkey, Zoho, or another free survey tool

Example: I recently used REDCap to conduct a needs assessment for an upcoming workshop on evaluating knowledge translation. I used a mix of multiple-choice and open-ended questions, and for one question I used the "slider". I wanted to know how attendees rated their ability to do KT evaluation before the workshop, so the "slider" question type let respondents slide along a horizontal bar from "0" at one end to "100" at the other, much like a visual analog scale. The data from these responses is shown as a scatter plot in the data report. It's a neat feature I had never seen in any survey software before. I customized one report of the open-ended text answers and a separate report that showed the multiple-choice responses as bar or pie charts. This data was very useful for shaping our approach to the workshop.

What are the benefits of using REDCap for surveys?

- Has the same features as the paid version of SurveyMonkey

- Data is housed in Canada by the NeuroDevNet Neuroinformatics Core, not in the United States like SurveyMonkey data

- Can export your data for analysis in SPSS, SAS, R, or MS Excel, or as a .csv file

- Can customize reports for data export so you can see trends visually (e.g., bar or pie charts for multiple-choice answers, text entries for open-ended questions)

- Can enter respondents' email addresses directly into the survey, so you can track responses by respondent
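If you export your data as a .csv file, you can also do a quick tally outside REDCap. The sketch below is a minimal Python example, not a REDCap feature. It assumes a hypothetical export with a `record_id` column, a multiple-choice column called `role` exported as text labels, and a 0-100 slider column called `ability_slider`; all of these names and values are invented for illustration, and your own columns will carry the variable names you chose when designing the survey. If your export uses numeric codes instead of labels, the counts will simply be keyed by those numbers.

```python
import csv
import io
from collections import Counter
from statistics import mean, median

# Hypothetical CSV export: column names and values are invented for illustration.
SAMPLE_EXPORT = """record_id,role,ability_slider
1,Researcher,35
2,Trainee,60
3,Researcher,
4,Trainee,20
"""


def load_rows(csv_text):
    """Parse CSV text into a list of dicts keyed by column name."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def tally_choices(rows, field):
    """Count each answer to a multiple-choice field, skipping blank answers."""
    return Counter(row[field] for row in rows if row[field].strip())


def slider_summary(rows, field):
    """Return (n, mean, median) for a 0-100 slider field, ignoring blanks."""
    values = [float(row[field]) for row in rows if row[field].strip()]
    return len(values), mean(values), median(values)


if __name__ == "__main__":
    rows = load_rows(SAMPLE_EXPORT)
    print(tally_choices(rows, "role"))
    print(slider_summary(rows, "ability_slider"))
```

The counts from `tally_choices` are the same numbers a bar or pie chart would display, and skipping blank cells means respondents who left a question empty are not counted.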

How can you get started using REDCap?

- Go to: https://neurodevnet.med.ualberta.ca/

- Enter your NeuroDevNet username and password

- If needed, watch the tutorial videos to learn how to build your survey in REDCap

- Enter your questions and design the survey using multiple-choice options or open-ended text answers

- Work with Neuroinformatics (Justin Leong, jleong [at] neurodevnet.ca) to finalize and launch your survey

- Retrieve your data (using export/report options listed above)

Contact the KT Core if you’d like help drafting evaluation questions. Here is a step-by-step example of how you can create your own survey in REDCap:

Step 1: Log into REDCap

Step 2: In this window, you will see the surveys you have already created. You can click on an existing "Project Title" in the list or, to create a new survey, click the "Create New Project" tab.

Step 3: When you click the "Create New Project" tab, you will see the following screen. Type in a title for your survey and choose an appropriate "purpose" for it (I usually choose "Quality Improvement"). I usually leave the default option to start the project from scratch. Then scroll to the bottom of the page and click the "Create Project" button.

Step 4: Set up your survey (project). Click "enable" for "Use surveys in this project?" and then click on it to open a page where you can upload a logo/photo and edit a message to your survey participants (for example, an ethics agreement if the survey is for research, an introduction or overview if you are doing a needs assessment or evaluation, or information about how the data will be used). When you are done, click the "I'm done!" button. To design your survey, click the "Online Designer" button and then "I'm done!".

Step 5: Design your survey. You will see an entry under "Instrument Name" called "My First Instrument". Click the "edit" button to change the title of this instrument. If you skip this and instead create a new survey (by choosing "add new instrument"), you will run into problems later that you will need to contact Justin to sort out. After changing the title to something more meaningful (it can be the same as your project title), click "save".

Step 6: Click on the “Instrument name” title you just edited. Click “Add Field” to add your first question to your survey.

Step 7: Design your survey questions. Choose the type of field you want, for example text box, multiple choice, true/false, or slider (visual analog scale). The slider is great if you want respondents to rate something based on how they felt about it, and it can give you more precise information than a scale from 1-10. For example, you can ask "How would you rate your knowledge about XYZ after this workshop?" and give them a scale from 0-100. Text boxes are good for open-ended questions, and multiple choice can be either "choose one only" or "choose multiple".

Step 8: Create your questions. The example below shows how you would create a multiple-choice question. Type the question you want to ask into "Field Label". Type the answer options into the box called "Choices", one choice per line, without numbers; REDCap adds the numbers automatically. Type in a meaningful variable name so that you'll recognize the question when you view the report of your data. I usually add "_text" to the end of the variable name for open-ended text questions, so that I can create one report for the text-based answers and another for the multiple-choice answers. Choose "yes" or "no" to set whether the question is mandatory for respondents to answer (the default is "no"). Accept the defaults for everything else and click "save".
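The "_text" naming habit also pays off if you export your data as a .csv file and work with it outside REDCap. Below is a hypothetical Python helper (not part of REDCap) that splits a list of exported column names into open-ended text fields and everything else. It assumes the ID column is named `record_id`, and the example column names are invented for illustration.

```python
def split_report_fields(header, id_field="record_id", text_suffix="_text"):
    """Split exported column names into open-ended text fields and all others.

    Assumes open-ended questions were given variable names ending in
    text_suffix, as described above. The ID column is left out of both lists.
    """
    text_fields = []
    other_fields = []
    for name in header:
        if name == id_field:
            continue  # the record ID is not a survey question
        if name.endswith(text_suffix):
            text_fields.append(name)
        else:
            other_fields.append(name)
    return text_fields, other_fields


if __name__ == "__main__":
    # Hypothetical column names from a CSV export.
    header = ["record_id", "role", "goals_text", "ability_slider", "barriers_text"]
    print(split_report_fields(header))
```

The two lists mirror the two reports described in this post: one for the open-ended answers and one for the multiple-choice (and slider) answers.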

This is what the question looks like:

Step 9: When you are done creating questions for your survey, click the "Project Setup" tab. Click "I'm done!" for the "Design your data collection instruments" item. Accept the default values and click "I'm done!" for the next three items: "Enable optional modules and customizations", "Set up project bookmarks" and "User Rights and Permissions". The next item asks you to test the instrument thoroughly before moving to "Production" mode. Once the project is in production, you will be limited in what you can edit or change.

When you are done testing (and clicked “I’m done!”) click the button that says “Move project to production” to get the survey link that you can send to participants by copying/pasting the link into the body of an email.


Step 10: Get your survey link so you can send it in the body of an email to your participants (for anonymous survey data collection). You may have to contact Justin for help with this. If you want to try it on your own, look under the "Data Collection" heading on the left-hand side of the project page and click "Manage Survey Participants". You should then be able to copy and paste the link to your survey into an email, a tweet, or another social media post. Note: you must have enabled surveys (Step 4) to get the link for your survey.

This blog post does not cover all of REDCap's survey functionality. You can also create custom reports so that, as people fill out your survey, you can look at the data in whatever way is easiest for you. For example, I usually create two reports: one for the text-based/open-ended answers and one for the multiple-choice answers, which are usually shown as bar or pie charts. It's up to you.

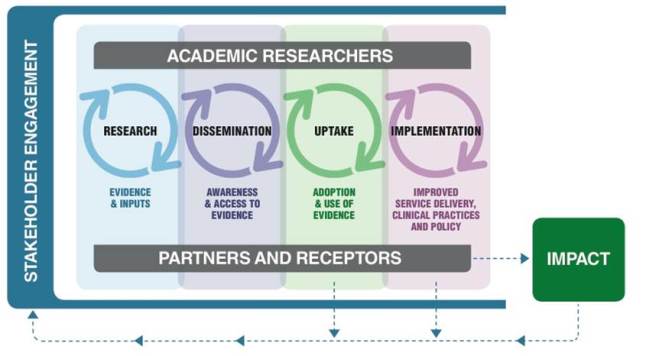

REDCap is a great tool for surveys. You may have used it for research-based surveys, but you may not have thought about using it to conduct a needs assessment before a workshop, course, or presentation, or to assess your end-users' needs before designing your KT products (summaries, infographics, videos, etc.). You can also use it to evaluate an event (an in-person workshop, stakeholder engagement event, conference, or webinar) or your KT products (survey your end-users to find out how they have used your KT product and what impact it may have had on practice or policy).


If you are a NeuroDevNet researcher or trainee and would like help with evaluation of your events and/or KT activities, contact the KT Core. If you need technical assistance setting up a REDCap survey, contact Justin Leong (jleong [at] neurodevnet.ca) from NeuroDevNet’s Neuroinformatics Core.